Welcome to The Movement Lab!

The goal of our lab is to create coordinated, functional, and efficient whole-body movements for digital agents and real robots that interact with the world. We focus on holistic motor behaviors that fuse multiple perceptual modalities to produce intelligent and natural movements. Our lab is unique in that we study “motion intelligence” in the context of complex ecological environments, involving both high-level decision making and low-level physical execution. We develop computational approaches for modeling realistic human movements in Computer Graphics and Biomechanics applications, learn complex control policies for humanoids and assistive robots, and advance fundamental numerical simulation and optimal control algorithms. The Movement Lab is directed by Professor Karen Liu.

Learnable Physics Simulators for Humans, Robots and the World

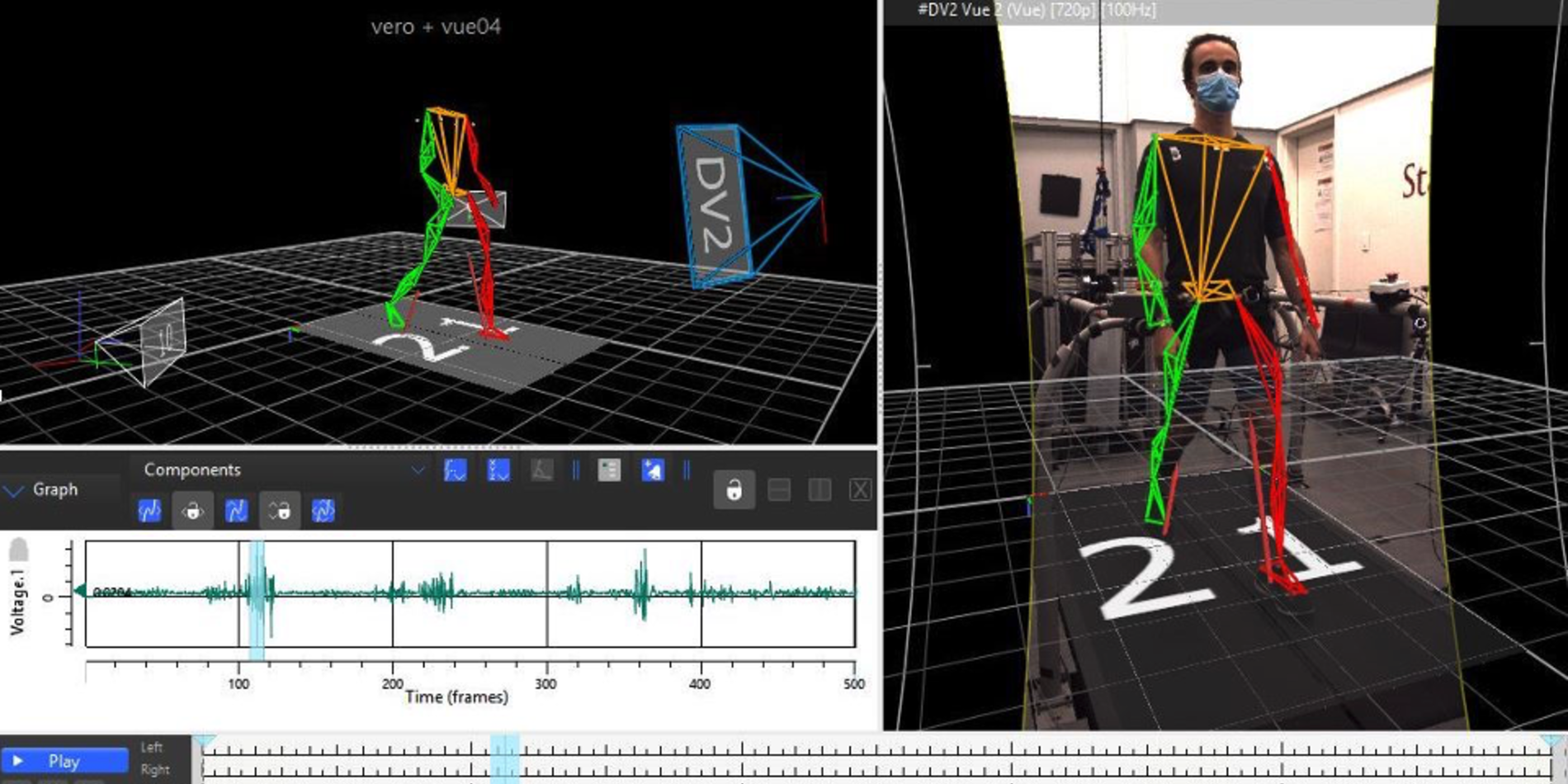

Physics simulation is increasingly relied upon to predict the outcome of real-world phenomena. The rise of deep learning has further increased its importance, because simulated environments let intelligent robots and embodied AI agents be trained safely and faster than in the real world. Our lab has created a number of physics simulation tools and algorithms that leverage both differential equations and measured data to build accurate simulation models of humans, robots, and the world they interact with.

Physical Human-Robot Interaction

AI-enabled robots have the potential to provide physical assistance that involves applying forces to human bodies. This capability will transform healthcare in our aging society, allowing older adults to remain independent, stay in their homes longer, and enjoy a better quality of life. We develop simulation tools and control algorithms to facilitate research in physical human-robot interaction (pHRI). We aspire to build intelligent, safe, and ethical machines that enhance our sensing and actuating capabilities but never take away our autonomy to make decisions.